The Power of Community

Educators are passionate about providing opportunities for all students to thrive. As educators ourselves, we share that passion. That’s why we connect professionals around the world in a community that learns, grows, and improves—together.

This powerful community, and the perspective it brings, strengthens our professional practice so we can bring you proven solutions that improve school quality.

What is Cognia?

We are a global network of enthusiastic educators here to help you strengthen your schools. Our holistic approach to continuous improvement encompasses accreditation and certification, assessment, professional learning, and customized improvement services. Like you, we want all students to have the opportunities that knowledge brings. Are you ready to lead your education community to new levels?

Top News and Events at Cognia

New Standards

Leader Chat: Mastering Yourself…So You Can Master Your World with Dr. Craig Dowden

The Source: A digital magazine focused on education thought leadership

Your success is our mission

Cognia is a forward-thinking nonprofit organization laser-focused on improving educational opportunities for all learners. When you work with us, you can expect:

Unmatched expertise to help you achieve visionary goals

Fresh perspective to help you link student and school performance

A global network of educators dedicated to your priorities

Services and solutions backed by well-regarded research

Be inspired

Districts and schools around the country and the world use Cognia solutions to help all learners realize their potential. Read their stories.

Introducing a professional learning community that leaves “sit-and-get” professional development (PD) in the rearview mirror.

Put this exciting new professional learning community into action today.

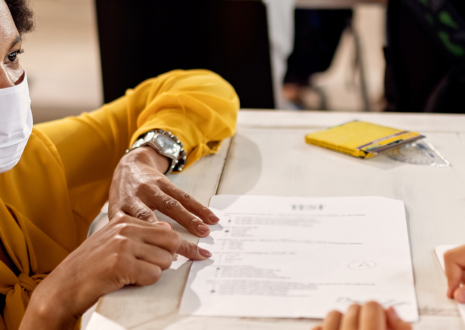

Activate dynamic improvement rooted in expert evaluation

One source, all the know-how you need

Our staff members and volunteers bring you successful practices and strategic ideas that elevate school and student performance.

Your needs set our direction

Every school and organization is unique. We offer a range of resources and services to help you pinpoint and address your priorities, allowing you to apply your successes to the next challenge.

Your priorities are our priorities

Because we’re a nonprofit organization, we answer to our clients, volunteers, staff members, and, ultimately, students. You’ll see that we’re focused on learners, not on the bottom line.

We know education firsthand

We know states, education systems, and schools because we’ve been educators like you, and because we partner with your staff at all levels. When you work with Cognia, you work directly with someone who knows your environment and can respond to your needs.

We bring expertise from around the globe

Working with educators across the country and around the world, we identify what works. Our research and our reach mean that you get proven practices and innovative ideas to help you raise learning to higher levels.

We know schools because we’re in schools

Our global network of educators helps you diagnose challenges and implement change.

Serving educators around the world

Education institutions worldwide look to Cognia for proven practices, insightful research, and strategies for the future.

Your partner in education

Our expertise spans a wide range of educational issues. But when it comes to what’s most important in your school or region, you’re the authority. That’s why we partner with you to help reach your goals.